My NixOS Journey Part 3

Part One | Part Two | Part Three

Welcome to my journey. I'm documenting my way down the rabbit hole (failures, successes, and lessons learned) in the hope that I don't repeat my mistakes, and that maybe you'll take something away from this too.

Where to discuss this article or contact me

Matrix:

#my-nixos-journey:beardedtek.com

#nixnerds:jupiterbroadcasting.com

@beardedtek:beardedtek.com

Telegram:

@beardedtek

Twitter:

@beardedtek

Email:

contact@beardedtek.com

This Article's GitHub Repo

https://github.com/beardedtek/My-Nix-OS-Journey

Bare Metal At Last

The time has come to put NixOS on my Dell T410 server, which I've dubbed Triton. It may be an older machine, but it still has plenty of kick left in it:

- 2x Intel Xeon E5630 @ 2.53GHz (8 cores / 16 threads total)

- 64GB DDR3 ECC RAM

- 500GB SATA SSD

- 2x Broadcom NetXtreme II BCM5716 Gigabit Ethernet

- Matrox MGA G200eW Graphics Card

- Nvidia GTX-1080 GPU

I previously ran Rockstor on this machine, a btrfs-based NAS distro built on openSUSE Leap 15.3. It was rock solid for nearly two years, but it is based on Python 2.7 and became slower as time went on.

If you're in the market for a community-run, lightweight NAS, I highly recommend checking it out: https://rockstor.com

Initial Requirements

- Basic System

- Docker Compose

- Proprietary Nvidia driver

- Nvidia in Docker

Services I Want to Run

- Traefik

- Authelia

- Pi-hole

- netboot.xyz

- Minetest

- Plex

- Overseerr

The NixOS Way or My Way

NixOS is nothing like other distros, and yet just like every other distro at the same time. By this I mean that NixOS has its own way of doing things, just as every distro does. Whether it's apt, snaps, YaST, dnf, zypper, or the AUR, each distro puts its own spin on things. Technologies like Docker, Podman, and Kubernetes make distros interchangeable in a way they never have been before. Although NixOS can run these services natively, I want to run them with Docker Compose so that I can move them between machines running different distros, as long as Docker is installed.

Build it Up

As shown in Part Two, I've started splitting my configuration into multiple files to make it easier to manage. As the layout below shows, I've added a folder named triton that holds the configuration files that differ from the base config.

```
install.sh
config/
|- boot.nix
|- configuration.nix
|- disks.nix
|- network.nix
|- packages.nix
|- services.nix
|- users.nix
|- triton/
|  |- disks.nix
|  |- docker.nix
|  |- hardware-configuration.nix
|  |- network.nix
|  |- packages.nix
|  |- services.nix
|- secrets/
   |- pw_user
   |- pw_root
   |- sshkey_beardedtek
```
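The post doesn't show how the host folder gets pulled into the build, so here is one hypothetical way to layer it on top of the base config. The file name `triton.nix` and the assumption that `configuration.nix` already imports the shared base modules are illustrative only; my actual install.sh may wire this differently. Because the NixOS module system merges definitions, lists (like `environment.systemPackages`) are concatenated and attribute sets are merged, but a plain value set differently in both a base file and a host file (for example `networking.hostName`) would raise a conflict unless one side uses `lib.mkForce`.

```nix
# Hypothetical config/triton.nix: layers the Triton-specific modules on top
# of the shared base configuration.
{ ... }:

{
  imports = [
    # Shared base config (assumed to import boot.nix, users.nix, etc. itself)
    ./configuration.nix

    # Host-specific modules for Triton
    ./triton/hardware-configuration.nix
    ./triton/disks.nix
    ./triton/docker.nix
    ./triton/network.nix
    ./triton/packages.nix
    ./triton/services.nix
  ];
}
```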

Filesystems

Here we build on our base btrfs filesystem and add NFS mounts for my media and for the shared Docker storage on my NAS. In production you would never want every service depending on a single NAS like this, but this is my homelab, where I don't have the resources to mirror data between servers.

To add an NFS mount, simply add an entry like the following under fileSystems:

```nix
"/path/to/mount" = {
  device = "server.address:/path/to/share";
  fsType = "nfs";
};
```

disks.nix

```nix
{
  fileSystems = {
    "/".options = [ "compress=zstd" ];
    "/home".options = [ "compress=zstd" ];
    "/nix".options = [ "compress=zstd" "noatime" ];

    "/nas/media" = {
      device = "192.168.2.35:/mnt/media";
      fsType = "nfs";
    };

    "/nas/Backup" = {
      device = "192.168.2.35:/mnt/Backup";
      fsType = "nfs";
    };

    "/usr/local/docker" = {
      device = "192.168.2.35:/mnt/SSD/docker";
      fsType = "nfs";
    };

    "/usr/local/data" = {
      device = "192.168.2.35:/mnt/Backup/docker/data";
      fsType = "nfs";
    };

    "/usr/local/data/immich" = {
      device = "192.168.2.35:/mnt/SSD/immich";
      fsType = "nfs";
    };
  };
}
```
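One optional tweak, assuming you'd rather not have boot stall or fail when the NAS is unreachable: mount a share on demand through a systemd automount. This is a sketch I haven't validated on Triton. `x-systemd.automount`, `noauto`, and `x-systemd.idle-timeout` are standard systemd mount options, passed through the `options` list of a `fileSystems` entry. It would replace the plain `"/nas/media"` entry above rather than sit alongside it.

```nix
{
  # Sketch: mount the media share on first access instead of at boot,
  # and unmount it again after 10 minutes without use.
  fileSystems."/nas/media" = {
    device = "192.168.2.35:/mnt/media";
    fsType = "nfs";
    options = [
      "x-systemd.automount"        # generate an automount unit for this path
      "noauto"                     # don't mount eagerly during boot
      "x-systemd.idle-timeout=600" # unmount after 600 seconds idle
    ];
  };
}
```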

Define Services

Here is where we set up our native NixOS services:

- openssh for remote CLI access
  - We disallow root login and password authentication.

- Xfce and xrdp for lightweight GUI access
  - This isn't strictly necessary, but since I'm experimenting, I might as well try it out.

- Docker for running most of our other services
  - While I could run almost all of my services "the Nix way", I also run other distros, and keeping services as stock docker-compose stacks makes it easier to move them to another host.

services.nix

```nix
{
  virtualisation = {
    docker = {
      # Enable Docker
      enable = true;
      # Expose the NVIDIA GPU to containers
      enableNvidia = true;
      # Auto prune Docker resources weekly
      autoPrune = {
        enable = true;
        dates = "weekly";
      };
    };
  };

  # Enable Services
  services = {
    # Enable openssh
    openssh = {
      enable = true;
      settings = {
        PasswordAuthentication = false;
        PermitRootLogin = "no";
      };
    };

    # Setup XFCE for use with xrdp
    xserver = {
      desktopManager = {
        xterm.enable = false;
        xfce.enable = true;
      };
      displayManager = {
        defaultSession = "xfce";
      };
    };

    # Setup xrdp
    xrdp = {
      enable = true;
      defaultWindowManager = "startxfce4";
      openFirewall = true;
    };
  };
}
```
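Enabling Docker doesn't by itself let a regular user talk to the Docker daemon. A minimal sketch, assuming the login user is named `beardedtek` (inferred from the `sshkey_beardedtek` secret, since users.nix isn't shown here): add that user to the `docker` group so docker and docker-compose commands work without sudo. Keep in mind that membership in the `docker` group is effectively root-equivalent on the host.

```nix
{
  # Hypothetical user name. extraGroups is a list, so this merges with any
  # groups (e.g. wheel) already assigned to the user in users.nix.
  users.users.beardedtek.extraGroups = [ "docker" ];
}
```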

Nvidia Setup

This was the most cryptic part of my setup. There is not a good source of documentation that clearly explains the process.

If you google "nixos nvidia", you get a lot of conflicting information, including posts from 5 months ago, 6 months ago, 2 years ago, and beyond.

The official documentation on nixos.wiki is pretty good if you only want to set up X or Wayland, but it does not explain how to set up an NVIDIA card for compute and hardware video acceleration.

Finally, the CUDA page on nixos.wiki gets you a little closer, but even it carries a disclaimer telling you to look directly at the GitHub source, which is not very user friendly.

If you're like me and want to set up an NVIDIA card for Plex transcoding, or for use with Frigate NVR or Immich, there is a simple, straightforward way to do it.

My system has a basic built-in Matrox graphics card. It's nothing fancy, but it's enough to run a simple GUI, which means I can fully offload my NVIDIA GPU using PRIME offload. This is ideal if you have a GTX 16 series or newer card, since the driver can power the card down completely when it's not in use. My 1080 can't do that, but it still works well this way, since it only sips about 5W at idle. Not too shabby.
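PRIME offload needs the PCI bus ID of each GPU. NixOS expects these in decimal as `PCI:bus:device:function`, while `lspci` prints them in hexadecimal, so the two can differ once a field reaches 10 or more. The sketch below annotates the two values used in gpu.nix. The `lspci` lines in the comments are abbreviated, illustrative output rather than a capture from Triton, and the `0a:00.0` device is hypothetical.

```nix
{
  hardware.nvidia.prime = {
    # `lspci` lists devices as bus:device.function in hexadecimal, e.g.
    # (illustrative, abbreviated output):
    #   06:00.0 VGA compatible controller: NVIDIA ... [GeForce GTX 1080]
    #   07:03.0 VGA compatible controller: Matrox ... G200eW
    # NixOS wants "PCI:bus:device:function" in decimal. Every field here is
    # below 10, so the digits carry over unchanged; a hypothetical device at
    # 0a:00.0 would instead be written "PCI:10:0:0".
    nvidiaBusId = "PCI:6:0:0";
    intelBusId = "PCI:7:3:0"; # the onboard Matrox, despite the option's name
  };
}
```

With `enableOffloadCmd = true`, NixOS also installs an `nvidia-offload` wrapper, so `nvidia-offload <program>` runs that program on the NVIDIA card while everything else stays on the Matrox.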

gpu.nix contains a basic NVIDIA driver setup, VA-API support, NVIDIA Docker support, and a few GPU-specific packages, including the nvtop and zenith monitoring tools:

gpu.nix

```nix
{ config, pkgs, ... }:

{
  # We must allow unfree packages to use the NVIDIA driver
  nixpkgs.config.allowUnfree = true;

  hardware = {
    # Enable OpenGL, including 32-bit DRI support
    opengl = {
      enable = true;
      driSupport = true;
      driSupport32Bit = true;
    };

    # Enable the NVIDIA driver
    nvidia = {
      modesetting.enable = true;
      # Enable PRIME offload, as the NVIDIA card is our secondary GPU
      prime = {
        offload = {
          enable = true;
          enableOffloadCmd = true;
        };
        # The onboard Matrox is our primary GPU; NixOS names this option intelBusId
        intelBusId = "PCI:7:3:0";
        nvidiaBusId = "PCI:6:0:0";
      };
    };
  };

  # In order to build the proprietary NVIDIA driver, we MUST enable
  # X and define nvidia as the video driver.
  services.xserver = {
    enable = true;
    videoDrivers = [ "nvidia" ];
  };

  # Some packages specific to using my NVIDIA card
  environment.systemPackages = with pkgs; [
    # Adds nvidia-container-runtime, nvidia-container-runtime-hook,
    # nvidia-toolkit, docker, nvidia-docker, crio,
    # nvidia-container-cli, containerd, & nvidia-container-toolkit
    nvidia-docker
    # VA-API implementation using NVIDIA's NVDEC
    nvidia-vaapi-driver
    # CLI system monitoring for GPU compute
    nvtop-nvidia
    # CLI system monitor with charts and GPU stats
    zenith-nvidia
  ];
}
```
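Installing nvidia-vaapi-driver as a system package may not be enough for host-side applications to actually use it. A sketch, assuming the same pre-24.11 `hardware.opengl` options used above: register the driver with the graphics stack and point libva at it through `LIBVA_DRIVER_NAME`, the variable the nvidia-vaapi-driver project documents. I haven't tested this on Triton, and it is separate from how containers reach the GPU through the NVIDIA container runtime.

```nix
{ pkgs, ... }:

{
  # Make the NVDEC-backed VA-API driver visible to the graphics stack
  hardware.opengl.extraPackages = with pkgs; [ nvidia-vaapi-driver ];

  # Tell libva to load the "nvidia" backend instead of auto-detecting one
  environment.variables.LIBVA_DRIVER_NAME = "nvidia";
}
```

After a rebuild, `vainfo` (from the libva-utils package) should list the profiles the NVIDIA backend supports.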

Networking

The only things we change from our base config are the hostname and a few firewall ports we want to open.

network.nix

```nix
{
  # Setup Networking
  networking = {
    hostName = "triton";
    enableIPv6 = false;
    firewall = {
      enable = true;
      allowedTCPPorts = [
        22    # ssh
        80    # traefik
        443   # traefik
        32400 # plex
        5000  # Frigate
        9000  # For testing web apps
      ];
    };
  };
}
```
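The firewall above doesn't yet cover the UDP-based services on my wish list. A hypothetical addition, assuming Pi-hole, netboot.xyz, and Minetest publish their usual default ports on the host (DNS on 53, TFTP on 69, and Minetest on 30000). Because list options like `allowedTCPPorts` are concatenated across modules, this could live in its own file without replacing anything in network.nix.

```nix
{
  networking.firewall = {
    # Hypothetical: default ports for services from the wish list
    allowedTCPPorts = [
      53    # Pi-hole DNS (TCP fallback for large responses)
    ];
    allowedUDPPorts = [
      53    # Pi-hole DNS
      69    # netboot.xyz TFTP
      30000 # Minetest default server port
    ];
  };
}
```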

System Packages

We're adding a few packages to make setup and troubleshooting easier. One I haven't seen in many other configs is nethogs, which shows which processes are using network bandwidth. Also, hwinfo is a great utility from the SUSE ecosystem that gives easy access to system hardware information.

packages.nix

```nix
{ pkgs, ... }:

{
  environment.systemPackages = with pkgs; [
    nano
    wget
    docker
    ipmitool
    hwinfo
    htop
    nethogs
  ];
}
```
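Two more packages that may be worth adding, as a sketch: `docker-compose`, since Docker Compose is on my requirements list but isn't in packages.nix, and `pciutils`, which provides `lspci` for looking up the GPU bus IDs used in gpu.nix. Since `environment.systemPackages` is a list, this can either be merged into packages.nix or kept as a separate module.

```nix
{ pkgs, ... }:

{
  environment.systemPackages = with pkgs; [
    docker-compose # the docker-compose CLI for running Compose stacks
    pciutils       # provides lspci, handy for finding GPU bus IDs
  ];
}
```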

Once all of this is in place, I boot the NixOS minimal installer, clone my Nix repo, run my install script, and BOOM!

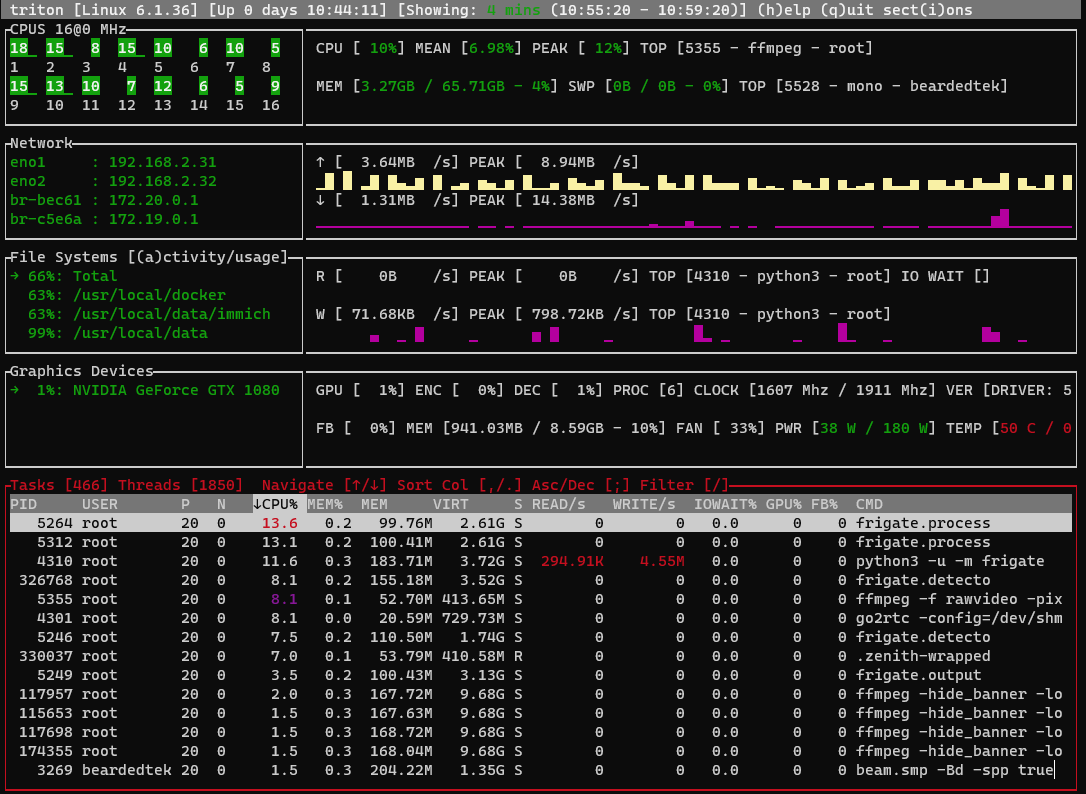

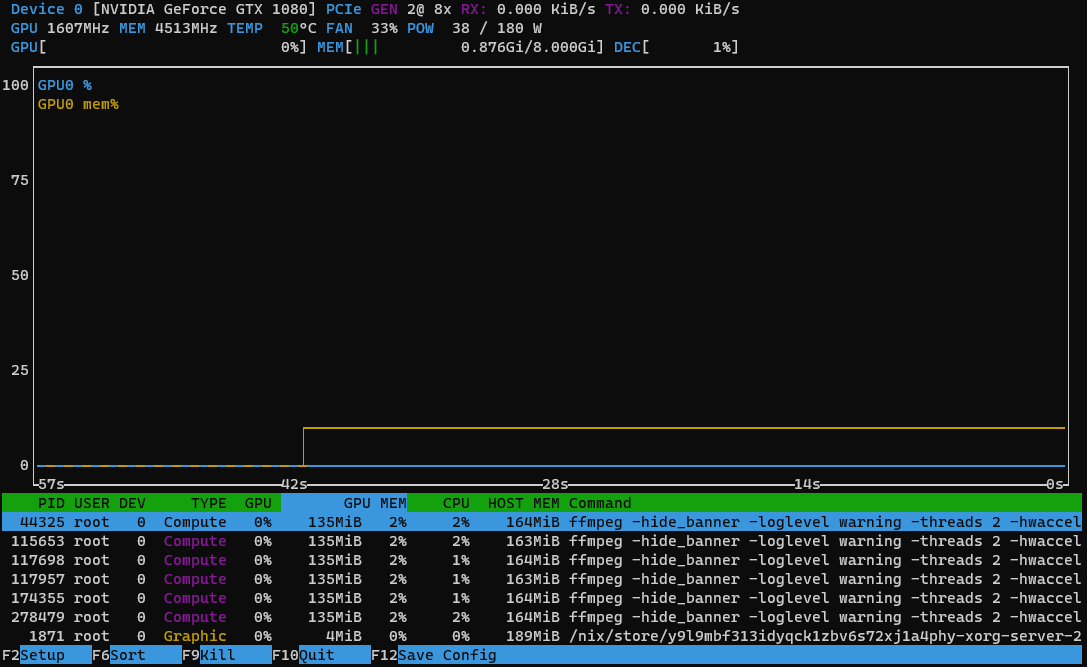

You'll notice in this looping GIF that nvidia-smi inside my Frigate container doesn't list any processes. This is normal Docker behaviour: the container runs in its own PID namespace, so nvidia-smi inside it can't map the GPU's processes. You'll also see the six ffmpeg processes, one for each of my camera feeds being consumed by Frigate.

Success!

As you can see, we have a working NVIDIA card handling video decoding and encoding inside Docker. Simple and easy! I hope this was helpful to you. If it was, please feel free to drop me a line, subscribe to my blog, or even send a donation via PayPal to encourage me along. Honestly, knowing that others find this useful drives me to do more.

Please feel free to reach out using the contact information at the beginning of this article to discuss what you think I could improve, what I did wrong, whether it helped you, or if you need some extra help.